Below is an extract of information I found on a blog about debatching flat files in BizTalk.

Sometimes you receive a batch file containing multiple records, and you have to split each record into a separate message and process each one individually.

This kind of splitting of a batch file is also known as debatching.

Depending on your requirements, you can debatch an incoming message either inside the pipeline or inside the orchestration.

The technique used to debatch a message inside the pipeline depends on whether the input is an XML file or a flat file.

For an XML file, you need an XML disassembler, an envelope schema and a document schema, which I will discuss some other time in a separate blog post or article.

Here I will show you how you can debatch a flat file.

Let us assume that we need to debatch a flat file with the following structure:

Field1,Field2,Field3,Field4,Field5,Field6

Field1,Field2,Field3,Field4,Field5,Field6

Field1,Field2,Field3,Field4,Field5,Field6

Field1,Field2,Field3,Field4,Field5,Field6

Field1,Field2,Field3,Field4,Field5,Field6

Field1,Field2,Field3,Field4,Field5,Field6

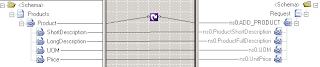

First, create a flat file schema similar to the one shown in the figure.

Make sure you specify the correct child delimiters for both the Root node (0x0D 0x0A, i.e. carriage return plus line feed) and the Record node (a comma).

Root Node:

Child Delimiter Type = Hexadecimal

Child Delimiter = 0x0D 0x0A

Child Order = Infix or Postfix

Record Node:

Child Delimiter Type = Character

Child Delimiter = ,

Child Order = Infix
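To make the two delimiter levels concrete, here is a minimal Python sketch of how a parser applies them: the Root's hexadecimal delimiter 0x0D 0x0A is the CR LF pair, and each Record's fields are separated by commas. It is a simplified model, not BizTalk's parser; `parse_flat_file` is a hypothetical name, and the sketch ignores features such as escape characters.

```python
from typing import List

# Root node: Child Delimiter Type = Hexadecimal, Child Delimiter = 0x0D 0x0A
ROOT_DELIMITER = bytes.fromhex("0D 0A").decode("ascii")  # CR LF, i.e. "\r\n"

# Record node: Child Delimiter Type = Character, Child Delimiter = ,
RECORD_DELIMITER = ","


def parse_flat_file(text: str) -> List[List[str]]:
    """Split text into records on CR LF, then split each record into fields on commas."""
    records = text.split(ROOT_DELIMITER)
    # With Postfix child order the last record is also followed by CR LF,
    # which leaves one empty string at the end of the split; drop it.
    if records and records[-1] == "":
        records.pop()
    return [record.split(RECORD_DELIMITER) for record in records]


sample = "Field1,Field2,Field3,Field4,Field5,Field6\r\n" * 3
for fields in parse_flat_file(sample):
    print(fields)
```

The same function accepts Infix input (no CR LF after the last record), because it only drops a trailing empty element when one is present.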

Now select the Record node and set its MaxOccurs property to 1. This is the key to debatching: because the schema now describes a message that contains a single record, the flat file disassembler automatically splits the batch and emits each record as an individual message.
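The outcome of MaxOccurs = 1 can be modeled in Python as one small XML document per input record. This is a sketch under assumptions: the element names `Root`, `Record` and `Field1` to `Field6` are taken from the sample layout, `debatch_to_xml` is a hypothetical function, and a real BizTalk schema would also declare a target namespace.

```python
import xml.etree.ElementTree as ET
from typing import Iterator

# Hypothetical element names, mirroring the sample record layout.
FIELD_NAMES = ["Field1", "Field2", "Field3", "Field4", "Field5", "Field6"]


def debatch_to_xml(batch: str) -> Iterator[str]:
    """Yield one <Root><Record>...</Record></Root> message per input record."""
    for line in batch.split("\r\n"):
        if not line:
            continue  # skip empty elements, e.g. the one after the final CR LF
        root = ET.Element("Root")
        record = ET.SubElement(root, "Record")  # exactly one Record: MaxOccurs = 1
        for name, value in zip(FIELD_NAMES, line.split(",")):
            ET.SubElement(record, name).text = value
        yield ET.tostring(root, encoding="unicode")


batch = "a1,a2,a3,a4,a5,a6\r\nb1,b2,b3,b4,b5,b6\r\n"
for message in debatch_to_xml(batch):
    print(message)
```

With MaxOccurs left unbounded, the same input would instead produce a single message containing every Record.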

Important: If you set the Child Order property of the Root node to Infix, make sure you also set the 'Allow Message Breakup At Infix Root' property to Yes.
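Why Infix needs the extra property can be sketched in Python: with Postfix every record ends with CR LF, while with Infix the CR LF appears only between records, so splitting a single-record schema at that delimiter has to be explicitly allowed. This is a simplified model of the rule in the note above, not the disassembler's implementation; `split_root` and its `allow_breakup_at_infix_root` flag are hypothetical names.

```python
from typing import List

CRLF = "\r\n"


def split_root(batch: str, child_order: str,
               allow_breakup_at_infix_root: bool = False) -> List[str]:
    """Split a batch into single-record messages (Record MaxOccurs = 1)."""
    parts = batch.split(CRLF)
    if child_order == "postfix":
        # Postfix: every record, including the last, is followed by CR LF,
        # so the final split element must be empty.
        if parts[-1] != "":
            raise ValueError("postfix root expects a trailing CR LF after the last record")
        return parts[:-1]
    if child_order == "infix":
        # Infix: CR LF only between records. The schema describes one record,
        # so breaking the stream at the infix delimiter must be allowed.
        if len(parts) > 1 and not allow_breakup_at_infix_root:
            raise ValueError("set 'Allow Message Breakup At Infix Root' to Yes")
        return parts
    raise ValueError(f"unsupported child order: {child_order!r}")


print(split_root("a\r\nb\r\n", "postfix"))
print(split_root("a\r\nb", "infix", allow_breakup_at_infix_root=True))
```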

Next, as usual, add a receive pipeline to the project, add a flat file disassembler component to its Disassemble stage, and set the component's Document schema property to the schema you just created.
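A receive pipeline runs its stages in order (Decode, Disassemble, Validate, ResolveParty), and because the disassembler can turn one inbound message into several, each later stage then handles every debatched message individually. The Python sketch below models only that fan-out; `run_receive_pipeline` and the stage functions are hypothetical stand-ins, not BizTalk components.

```python
from typing import Callable, Iterable, List

Stage = Callable[[str], List[str]]


def run_receive_pipeline(message: str, stages: Iterable[Stage]) -> List[str]:
    """Pass a message through ordered stages; a stage may return many messages."""
    messages = [message]
    for stage in stages:
        # Every message produced so far goes through the next stage individually.
        messages = [out for msg in messages for out in stage(msg)]
    return messages


def decode(msg: str) -> List[str]:
    return [msg]  # placeholder: no decoding needed for plain text


def flat_file_disassemble(msg: str) -> List[str]:
    # Fan-out: one output message per CR LF-delimited record.
    return [record for record in msg.split("\r\n") if record]


def validate(msg: str) -> List[str]:
    if msg.count(",") != 5:  # six comma-separated fields expected
        raise ValueError(f"unexpected record layout: {msg!r}")
    return [msg]


batch = "a,b,c,d,e,f\r\ng,h,i,j,k,l\r\n"
print(run_receive_pipeline(batch, [decode, flat_file_disassemble, validate]))
# prints ['a,b,c,d,e,f', 'g,h,i,j,k,l']
```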

That’s all there is to it.

Now suppose your flat file also has a header record, a trailer (footer) record, or both, as shown below.

FirstName,LastName,RegNo,School,City

SubjectName1,Score1

SubjectName2,Score2

SubjectName3,Score3

SubjectName4,Score4

SubjectName5,Score5

SubjectName6,Score6

SubjectName7,Score7

SubjectName8,Score8

TotalScore

To parse this file as one single message, you would normally create a flat file schema as shown below.

To debatch the body records, however, you need to create three separate schemas (header, body and trailer), as shown below. Make sure the MaxOccurs property of the Body record is set to 1.

Then, in the flat file disassembler, set the Header schema, Document schema and Trailer schema properties to the corresponding schemas.

Note: You might need to set a unique Tag Identifier property in each schema and prefix every record in the file with the matching tag identifier, as shown below, so that the flat file parser can distinguish the header, body and trailer records.

HFirstName,LastName,RegNo,School,City

BSubjectName1,Score1

BSubjectName2,Score2

BSubjectName3,Score3

BSubjectName4,Score4

BSubjectName5,Score5

BSubjectName6,Score6

BSubjectName7,Score7

BSubjectName8,Score8

FTotalScore
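The tag identifiers let the parser decide which schema applies to each line before splitting it into fields. Here is a minimal Python sketch of that dispatch, assuming the one-character tags H, B and F from the sample above and CR LF record delimiters; `split_by_tag` and the `Batch` container are hypothetical, and the real disassembler takes the tags from the schemas rather than from a hard-coded table.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical tag table: tag identifier -> which schema the record belongs to.
TAGS = {"H": "header", "B": "body", "F": "trailer"}


@dataclass
class Batch:
    header: Optional[List[str]] = None
    bodies: List[List[str]] = field(default_factory=list)
    trailer: Optional[List[str]] = None


def split_by_tag(text: str) -> Batch:
    batch = Batch()
    for line in text.split("\r\n"):
        if not line:
            continue
        for tag, kind in TAGS.items():
            if line.startswith(tag):
                fields = line[len(tag):].split(",")  # strip the tag, then split fields
                break
        else:
            raise ValueError(f"no tag identifier matches record: {line!r}")
        if kind == "header":
            batch.header = fields
        elif kind == "body":
            # Collected here; the disassembler would emit each as its own message.
            batch.bodies.append(fields)
        else:
            batch.trailer = fields
    return batch


sample = ("HFirstName,LastName,RegNo,School,City\r\n"
          "BSubjectName1,Score1\r\n"
          "BSubjectName2,Score2\r\n"
          "FTotalScore\r\n")
result = split_by_tag(sample)
print(result.header, len(result.bodies), result.trailer)
```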

You can preserve the header record in the message context by setting the Preserve header property of the flat file disassembler to True, so that it can be reused as the header record of each debatched message.

To use the preserved header, set the Header schema property of the flat file assembler (in the send pipeline) to the same header schema you used in the disassembler. Each debatched message then carries its own copy of the header. Here’s how the output will look:

HFirstName,LastName,RegNo,School,City

BSubjectName1,Score1

HFirstName,LastName,RegNo,School,City

BSubjectName2,Score2

HFirstName,LastName,RegNo,School,City

BSubjectName3,Score3

HFirstName,LastName,RegNo,School,City

BSubjectName4,Score4

HFirstName,LastName,RegNo,School,City

BSubjectName5,Score5

HFirstName,LastName,RegNo,School,City

BSubjectName6,Score6

HFirstName,LastName,RegNo,School,City

BSubjectName7,Score7

HFirstName,LastName,RegNo,School,City

BSubjectName8,Score8
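The round trip can be modeled in Python: on the receive side the header is copied into the context of each debatched message, and on the send side the assembler writes it back in front of the body record. This is a minimal sketch; the `Message` class and the `PRESERVED_HEADER` context key are hypothetical stand-ins for BizTalk's message context, and joining the two records with CR LF is an assumption about the output schema's delimiters.

```python
from dataclasses import dataclass, field
from typing import Dict, List

PRESERVED_HEADER = "PreservedHeader"  # hypothetical context key


@dataclass
class Message:
    body: str
    context: Dict[str, str] = field(default_factory=dict)


def disassemble(batch: str, preserve_header: bool = True) -> List[Message]:
    """Emit one message per B record; optionally copy the H record into its context."""
    lines = [line for line in batch.split("\r\n") if line]
    header = next((line for line in lines if line.startswith("H")), None)
    messages = []
    for line in lines:
        if line.startswith("B"):
            msg = Message(body=line)
            if preserve_header and header is not None:
                msg.context[PRESERVED_HEADER] = header
            messages.append(msg)
    return messages  # this sketch drops the F (trailer) record, matching the sample output


def assemble(msg: Message) -> str:
    """Write the preserved header (if any) back in front of the body record."""
    header = msg.context.get(PRESERVED_HEADER)
    return "\r\n".join([header, msg.body] if header else [msg.body])


batch = ("HFirstName,LastName,RegNo,School,City\r\n"
         "BSubjectName1,Score1\r\n"
         "BSubjectName2,Score2\r\n"
         "FTotalScore\r\n")
for msg in disassemble(batch):
    print(assemble(msg))
```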